A/B Testing in Web Applications: Tips for Product Managers to Drive Better Decisions

In the rapidly evolving digital marketplace, product managers consistently face decisions that can significantly influence user engagement and business metrics. With myriad features and design choices available, the best path forward isn’t always intuitive. A/B testing, a method of comparing two versions of a web page or app to determine which one performs better, has emerged as a powerful tool in a product manager’s arsenal. By testing variations of a web application against each other, product managers can identify, with evidence rather than intuition, which options resonate most with users.

What is A/B Testing?

A/B testing, sometimes referred to as split testing, is the process of showing two variants, A and B, to different segments of visitors at the same time and comparing which variant drives more conversions or other predefined goals. This experimentation method removes guesswork and fills the gap between theoretical designs and the real-world user experience, providing data-driven insights that can improve a product’s performance.

Why Product Managers Should Care About A/B Testing

Product managers wear various hats: strategist, designer, analyst, and even psychologist. A/B testing gives them the quantitative evidence needed to make informed decisions across these areas. Instead of relying purely on intuition or stakeholder opinions, product managers can back up their choices with data. This structured approach reduces the influence of personal bias and keeps the focus on how users actually behave.

Key Steps in the A/B Testing Process

1. Define Clear Goals

Every A/B test starts with a clear understanding of what you want to achieve. Whether it’s increasing click-through rates, improving user engagement, or enhancing user retention, having a precise goal will steer your testing strategy in the right direction. Define specific, measurable metrics that indicate success for your test.

2. Hypothesize and Formulate Variations

Once you have a goal, develop hypotheses about changes that could lead to your desired improvement. These hypotheses might concern different design elements, layout changes, or even copy variations. Create your Version A (control) and Version B (variant), ensuring that the only significant differences are those being tested.

3. Select the Right Audience Segment

Deciding who will see your variations is crucial, as different segments of your user base might react differently. Whether you focus on new visitors, repeat users, or another segment, understanding your audience ensures your insights are relevant and actionable.

4. Run the Test

Launch the A/B test, directing 50% of the traffic to the control and the remaining 50% to the variant. Run the test long enough to collect the sample size you planned for, and avoid stopping early the moment one version appears to be ahead; early leads often fail to hold up once more data arrives.
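One common way to implement a 50/50 split is to hash a stable user identifier, so the same user always lands in the same group without any stored state. The sketch below is illustrative only: the function name `assign_variant`, the experiment name, and the user ID format are assumptions, not part of any particular testing platform.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variant_share: float = 0.5) -> str:
    """Deterministically place a user in 'control' or 'variant'.

    Hypothetical helper: hashing the experiment name together with the
    user ID gives each experiment its own independent split.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 8 hex digits (32 bits) onto the range [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "variant" if bucket < variant_share else "control"


if __name__ == "__main__":
    # The same user gets the same answer on every call.
    print(assign_variant("user-42", "checkout-button"))
    print(assign_variant("user-42", "checkout-button"))
```

Because the assignment depends only on the identifier and the experiment name, it stays consistent across sessions and servers as long as the identifier itself is stable.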

5. Analyze the Results

Once the test concludes, use statistical analysis to determine which version performed better. Measure the variant’s uplift against the control (the baseline) and check whether the difference is statistically significant rather than a product of chance. Analyzing user behavior data can also reveal how and why users engage differently with each variant.
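For a conversion-rate metric, one standard way to check significance is a two-proportion z-test. The sketch below uses only the Python standard library; the function name and the visitor and conversion counts are made up for illustration, and a dedicated testing tool may use a different statistical method.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) comparing conversion rates of A and B."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("No variation in the data; the test is undefined.")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Illustrative numbers: 200 of 5000 convert on A, 260 of 5000 on B.
    z, p = two_proportion_z_test(200, 5000, 260, 5000)
    uplift = (260 / 5000 - 200 / 5000) / (200 / 5000)
    print(f"relative uplift={uplift:.1%}, z={z:.2f}, p={p:.4f}")
```

A p-value below the threshold chosen before the test (0.05 is a common convention) suggests the difference is unlikely to be due to chance alone.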

6. Implement the Winning Variation

If one variation provides a clear lift in your desired metrics, consider rolling out that change to all users. However, if results are inconclusive, examine the data deeper to uncover hidden trends or iterate with further testing.

Best Practices for Effective A/B Testing

Start Simple

Especially for beginners, it’s best to start with simple tests such as button colors, calls-to-action (CTAs), or headline variations. These elements are easy to change and can often yield quick insights that inform more complex tests.

Prioritize High-Impact Areas

Focus your efforts on areas of your application that have the most significant impact on your user’s journey, such as the homepage, sign-up page, or checkout process. Small changes in these areas can lead to substantial improvements.

Consider User Psychology

Understanding cognitive biases and user psychology can form the basis for meaningful tests. Concepts like scarcity, social proof, and anchoring can be powerful drivers of user behavior and can guide your hypotheses.

Avoid Testing Too Many Changes at Once

Testing too many changes simultaneously complicates the analysis, because you cannot tell which change produced the result. Keep each test focused so that any difference in outcome can be attributed to a specific change.

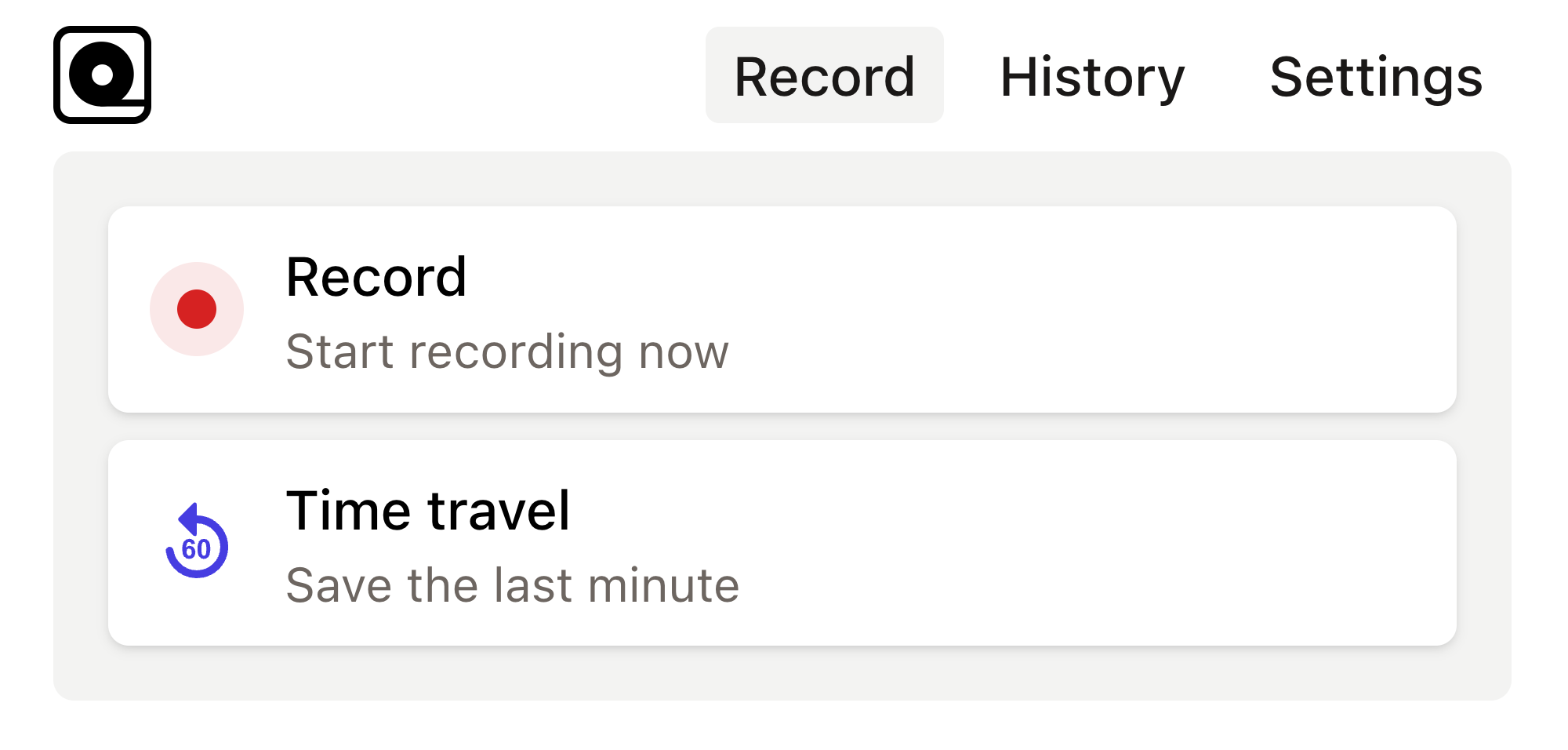

Use Robust Tools and Platforms

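Employ comprehensive A/B testing tools such as Optimizely or VWO, which provide robust features to design, run, and analyze tests efficiently. Google Optimize, once a common choice, was discontinued by Google in September 2023, so teams that relied on it need an alternative.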

Document Every Test

Record all test details, including hypotheses, user segments, duration, and results. This documentation can support learning, backtracking, and building a robust testing culture within your team.
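A lightweight way to keep such records consistent is a small structured format that can be stored alongside the code or in a shared repository. The sketch below is one possible shape, not a standard: the class name `ExperimentRecord` and all of its field names are assumptions chosen to mirror the details listed above.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date


@dataclass
class ExperimentRecord:
    """Hypothetical record of a single A/B test."""
    name: str
    hypothesis: str
    segment: str
    start: date
    end: date
    primary_metric: str
    results: dict = field(default_factory=dict)

    @property
    def duration_days(self) -> int:
        # Count both the start and the end day.
        return (self.end - self.start).days + 1

    def to_json(self) -> str:
        data = asdict(self)
        # Dates are not JSON-serializable, so store them as ISO strings.
        data["start"] = self.start.isoformat()
        data["end"] = self.end.isoformat()
        data["duration_days"] = self.duration_days
        return json.dumps(data, indent=2)


if __name__ == "__main__":
    record = ExperimentRecord(
        name="checkout-button",
        hypothesis="A more prominent button increases completed checkouts.",
        segment="new visitors",
        start=date(2024, 1, 1),
        end=date(2024, 1, 14),
        primary_metric="checkout conversion rate",
    )
    print(record.to_json())
```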

Challenges in A/B Testing and How to Overcome Them

While A/B testing is a valuable tool, it does come with its own set of challenges, such as running tests that are too short, dealing with low traffic volumes, or encountering sample pollution. To overcome these challenges:

- Ensure Adequate Sample Size: Calculate the required sample size before the test starts, using a sample size calculator or a standard power calculation, and run the test until that sample has been collected.

- Keep Group Assignment Consistent: Avoid sample pollution by ensuring each user remains in the same group (control or variant) for the whole test period. This can be achieved with cookies or session tracking.

- Account for External Factors: Recognize and adjust for external influences, such as seasonality or technical changes within your app, that might skew results.
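The sample size point can be made concrete with the usual approximation for comparing two conversion rates. The sketch below assumes a two-sided test; the function name, the default significance level of 0.05, and the default power of 0.8 are conventional choices rather than values taken from this article, and online calculators may use slightly different formulas.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline_rate: float, relative_lift: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed in EACH group to detect the given lift."""
    if relative_lift == 0:
        raise ValueError("relative_lift must be non-zero")
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1):
        raise ValueError("rates must fall strictly between 0 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided threshold
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


if __name__ == "__main__":
    # Illustrative: 4% baseline conversion, hoping to detect a 25% relative lift.
    n = sample_size_per_group(0.04, 0.25)
    daily_visitors = 1000  # assumed traffic entering the test each day
    print(n, "users per group;", math.ceil(2 * n / daily_visitors), "days at this traffic")
```

Smaller expected lifts require much larger samples, which is why low-traffic pages often need tests that run for weeks.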

The Future Outlook of A/B Testing in Web Applications

With the increasing importance of AI and machine learning, the future of A/B testing looks promising. Advanced algorithms are being integrated to offer predictive insights and automate some manual aspects of A/B testing, further enriching decision-making capabilities. Additionally, as privacy regulations evolve, obtaining user consent and handling data responsibly will become paramount. Product managers should stay informed on how these technological and regulatory factors shape testing methodologies so they can continue to get the most out of A/B testing.

Conclusion

A/B testing empowers product managers to transform abstract ideas into quantifiable outcomes that directly enhance the user experience. By adopting a methodical approach to testing variations, product managers can drive better decisions grounded in concrete evidence. While obstacles exist, with careful planning and best practices, A/B testing remains a cornerstone methodology for optimizing web applications so that they resonate with users. Whether you’re launching a startup or managing an established product, integrating A/B testing into your strategy can support sustained improvement in the digital landscape.
